by D.H. Stamatis

Much has been said about Six Sigma's define-measure-analyze-improve-control (DMAIC) model. With DMAIC, problems are identified and overcome in a process of continual improvement. However, important as it is to use this method successfully, we too often avoid the real source of most problems: the design phase itself. In the language of Six Sigma, this phase follows the define-characterize-optimize-verify (DCOV) model.

Six Sigma Glossary

- DMAIC: define-measure-analyze-improve-control

- DCOV: define-characterize-optimize-verify

- PMT: program module teams

- QSA: quality system assessment

Most will agree that the verify stage of DCOV presents the most stumbling blocks. With that in mind, this article looks at DCOV from both a content and a timing perspective to demystify the process. We'll address design review in detail and provide guidelines that readers can use in their own work environments.

What are design reviews?

The predominant purposes of any design review are to:

- Provide a guideline for peer-oriented assessment and coaching

- Provide a method to assess design status and implement corporate timing guidelines for quality and reliability methods

- Serve as a requirement for assessing and scoring product development

A design review is integral to each of the three component requirements of a quality system for product engineering. The component requirements are:

- System assessment (e.g., satisfactory score(s) on quality assessment criteria questions)

- Results metrics (e.g., satisfactory trends on the specified engineering results metrics)

- Customer endorsements (e.g., written endorsements from specified engineering customers)

Quality system assessment and product development

As with any other system, an organization must have, along with design review, a vehicle for assessment. The quality system assessment must be part of the design review and should emphasize the high-leverage quality and reliability disciplines in the corporate timing schedule. It should be organized in categories that reflect organizational objectives, and each category should include subsections describing the requirements. One word of warning: Make sure that the requirements you specify match your organization's culture, values and mission.

Applicability and scope

The design review can be applied to:

- Product design organizations or activities (e.g., department, section or program module teams). The PMT approach is particularly relevant if the organization is dealing with specific products.

- An organization's internal and external engineering activities

Design review is intended for all levels of product design activity (e.g., component, subsystem and complete system programs) and supporting manufacturing engineering activity. It applies equally to large product programs and minor design changes.

Design review fundamentals

Perhaps the most important characteristics of any design review are:

- A focus on engineering for quality and reliability, not on program status

- Peer review by another assigned PMT, not by senior management

- Distributing working documents to a coaching PMT in advance of the review, not "walking in cold and shooting from the hip"

- Honest discussion and learning from each other, helping both PMTs to improve

Without understanding and internalizing these four fundamentals, a design review is bound to be merely another show in an endless parade of quality tools.

Design review operation

An organization's timing schedule is critical to any design review content. Without it, the product development cycle will function like a sailboat without a rudder. So it's imperative that the timing not only be in place but also be reasonable, attainable, realistic and measurable. The following procedural requirements must therefore be understood and implemented as needed:

1. The quality system assessment should focus the design team's attention on which vital quality and reliability practices to implement for each relevant phase of the timing milestones. (Every organization must have timing and milestone requirements.)

2. Every PMT should be assigned a "peer coaching" team. These teams assess and coach one another with respect to the QSA. The assigned peer-coaching pairings should rotate periodically, depending on the organization's timing and product cycle.

3. Quality team leaders should attend each other's PMT meetings to understand the other team's scope, open issues and current status. This should occur during the first few weeks after the teams conduct their self-assessment training sessions. During this time frame, leaders should also schedule the first design reviews and decide which QSA sections will be assessed at the first design review. This will depend, of course, on the relevant timing and milestone.

4. Usually two weeks before the design review, the quality team to be coached produces the documents required to support the relevant timing milestone. The quality coaching team studies the documents in detail during the following week and prepares constructive comments on the QSA criteria under evaluation.

5. One week before the design review, the quality coaching team reviews its comments and critiques with members of the steering team. An agenda for the design review is produced at this point.

6. The design review is held. No scoring is conducted; there is only constructive dialogue about the team's strengths and weaknesses, within the framework of the QSA's quality and reliability parameters, that this initial design review uncovers.

7. During the next several months, the teams continue their work, focusing on the relevant timing disciplines but emphasizing improvement in the weak areas found during the initial design review.

8. The quality teams agree on a second design review date and follow the same preparation process as for the initial design review. After completing this design review, the peer coaching team leader uses the questions in the QSA to score and assess the timing quality of the second design review. This becomes the design team's score for that moment along the official timeline. The teams are then assigned new coaching partners, and the cycle begins anew.

QSA scoring guidelines

Now that we've reviewed some of the overall goals, roles and responsibilities for a typical design review, let's examine the QSA process. The intent isn't to give a cookbook approach but rather a series of guidelines for what's important and how to evaluate the design review for optimum results. It's important to recognize that each design review is unique, as is each organization, and these reviews must be thought out completely.

The very general scoring guideline format shown on page 28 demonstrates the scoring process. Scoring may be used to establish a baseline and measure performance improvement with respect to the QSA. The scoring may be performed as a self-assessment by an individual or organization, or by peers. The actual cutoff point for an acceptable score depends on a consensus of the individuals involved and, for obvious reasons, is organization- and product-dependent.
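As a minimal sketch of using scores to set a baseline and then measure improvement, the Python below compares two scoring passes question by question. The class and function names, the 0-10 question scores and the cutoff of 60 are all hypothetical; as noted above, a real cutoff is set by consensus.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    """One QSA scoring pass, such as a self-assessment or a peer review."""
    label: str
    question_scores: list[int]  # hypothetical: 0-10 points per question

    @property
    def total(self) -> int:
        return sum(self.question_scores)


def change_per_question(baseline: Assessment, later: Assessment) -> list[int]:
    """Point change for each question, so weak areas that have not improved stand out."""
    if len(baseline.question_scores) != len(later.question_scores):
        raise ValueError("both assessments must score the same questions")
    return [after - before
            for before, after in zip(baseline.question_scores, later.question_scores)]


def meets_cutoff(assessment: Assessment, cutoff: int) -> bool:
    """The cutoff is agreed by consensus and is organization- and product-dependent."""
    return assessment.total >= cutoff


# Invented scores, for illustration only.
baseline = Assessment("self-assessment", [6, 5, 7, 4, 6, 5, 8, 6, 5, 7])
later = Assessment("peer review", [8, 7, 7, 7, 8, 6, 9, 8, 7, 8])
print(baseline.total, later.total)                          # 59 75
print(change_per_question(baseline, later))                 # [2, 2, 0, 3, 2, 1, 1, 2, 2, 1]
print(meets_cutoff(baseline, 60), meets_cutoff(later, 60))  # False True
```

Looking at the per-question change rather than only the total keeps attention on the weak areas, such as the third question here, which did not move at all.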

The peer review process

The peer review process provides for assessment and scoring of an engineering organization or individual by another engineering organization or individual. This review should include coaching and learning from one peer to another. It's recommended that the initial review by the peer remain confidential. Subsequent reviews should be published to indicate strengths and weaknesses and to show continual improvement.

Although "Scoring Guidelines" gives an overview of the guidelines, they aren't functional as listed. For useful guidelines, the criteria must be measurable and at least somewhat specific. The rest of this article presents guidelines for each stage of the design review process. These four stages are: defining product and process, designing product and process, verifying product and process, and managing the program.

Defining product and process

The objective here is to establish or prioritize customer wants, needs, delights and so on.

Therefore, the requirements are to identify customers and establish or prioritize these wants, needs,

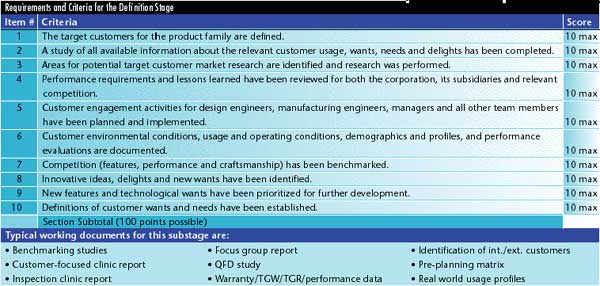

delights, real-world usage profiles and demographics. To do that, points are developed for each question and weighed against both each other and the other subcategories. (See page 28.) The

actual numerical scheme isn't as important as the differentiation and understanding of the questions

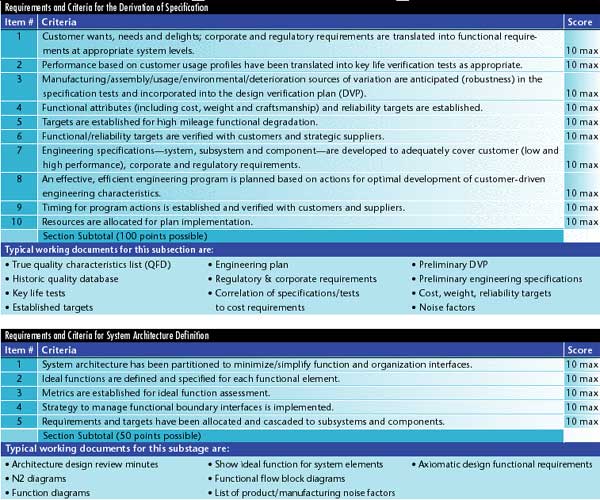

asked. In our example on page 28, each question is given 10 points for a total of 100 subsection points. A minimum score of 60 is expected, but a good score is anything higher than 85. The second subsection of the definition stage is deriving the customer-driven specification—purely

an engineering task. The requirements here are to translate customer, corporate and regulatory functions; make appropriate trade-offs; and establish product or process specifications (i.e.,

requirements) and engineering test plans. The specific criteria are shown below. Note that each item

is again weighed on a 10-points-per-question rating for a total of 100 points. Here, too, the actual

numerical values aren't as significant as the rationale and weighing process for differentiation. Any

numerical scheme can be used, as long as the outcome satisfies the objective. A minimum score of 70 is expected. Items one and two must have a minimum value of nine points each. Again, a good

score is anything higher than 85. The third subsection of the definition stage is defining the system architecture and function. This,

too, is purely an engineering task. The requirements here are to define system architecture, inputs/outputs and ideal function for each system element, and also to identify interfaces. Interfaces

are very critical in the ultimate design because they present challenges from interaction. The classical

interface opportunities are due to the physical proximity of different items, information transfer, different material compatibility and energy transfer.
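One way to make sure no interface is overlooked is to record, for every pair of system elements, which of the four classical interface types applies. The sketch below assumes hypothetical element names and interactions; it lists the pairs for which nothing has been recorded, so the team can either confirm them as non-interacting or examine them further.

```python
from enum import Enum
from itertools import combinations


class Interface(Enum):
    """The four classical interface opportunities named in the text."""
    PHYSICAL_PROXIMITY = "physical proximity"
    INFORMATION_TRANSFER = "information transfer"
    MATERIAL_COMPATIBILITY = "material compatibility"
    ENERGY_TRANSFER = "energy transfer"


# Hypothetical system elements and recorded interfaces, for illustration only.
ELEMENTS = ["housing", "circuit board", "sensor", "wiring harness"]
RECORDED = {
    frozenset({"housing", "circuit board"}): {Interface.PHYSICAL_PROXIMITY,
                                              Interface.ENERGY_TRANSFER},
    frozenset({"circuit board", "sensor"}): {Interface.INFORMATION_TRANSFER},
    frozenset({"housing", "wiring harness"}): {Interface.MATERIAL_COMPATIBILITY},
}


def unexamined_pairs(elements: list[str],
                     recorded: dict[frozenset, set[Interface]]) -> list[tuple[str, str]]:
    """Element pairs with no recorded interface; each should be confirmed as non-interacting."""
    return [pair for pair in combinations(elements, 2)
            if frozenset(pair) not in recorded]


for a, b in unexamined_pairs(ELEMENTS, RECORDED):
    print(f"{a} <-> {b}: no interface recorded")
```

Keying each entry on an unordered pair (a `frozenset`) means "housing/sensor" and "sensor/housing" are treated as the same interface.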

The specific criteria for this subsection are shown at the bottom of page 30. Note that each item is weighted on a 10-points-per-question scale for a total of 50 points. Again, the actual numerical values aren't as significant as the rationale and weighting process for differentiation. One may use any numerical scheme as long as the outcome satisfies the objective. In this case, a minimum score of 30 is expected, and questions two and three must have minimum values of nine points each. A good score is anything higher than 40.
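The three subsection rules described for the definition stage (10 questions, minimum 60, good above 85; 10 questions, minimum 70 with items one and two at nine points or more, good above 85; 5 questions, minimum 30 with questions two and three at nine points or more, good above 40) can be encoded as data. The sketch below is one way to do it; the names and rating labels are hypothetical, and the sample scores are invented for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class SubsectionRule:
    """Scoring rule for one QSA subsection (values from the examples in the text)."""
    name: str
    questions: int
    minimum: int     # minimum expected total
    good_above: int  # a "good" total is anything higher than this
    # Question number (1-based) -> minimum points that question must earn.
    required: dict[int, int] = field(default_factory=dict)
    points_per_question: int = 10


RULES = {
    "wants": SubsectionRule("customer wants and needs", 10, minimum=60, good_above=85),
    "spec": SubsectionRule("customer-driven specification", 10, minimum=70,
                           good_above=85, required={1: 9, 2: 9}),
    "architecture": SubsectionRule("system architecture and function", 5, minimum=30,
                                   good_above=40, required={2: 9, 3: 9}),
}


def rate(rule: SubsectionRule, scores: list[int]) -> str:
    """Classify one subsection's question scores against its rule."""
    if len(scores) != rule.questions:
        raise ValueError(f"{rule.name}: expected {rule.questions} scores")
    if any(not 0 <= s <= rule.points_per_question for s in scores):
        raise ValueError(f"{rule.name}: each score must be 0-{rule.points_per_question}")
    # A mandatory item below its floor fails the subsection regardless of the total.
    for question, floor in rule.required.items():
        if scores[question - 1] < floor:
            return "required item not met"
    total = sum(scores)
    if total < rule.minimum:
        return "below minimum"
    return "good" if total > rule.good_above else "acceptable"


print(rate(RULES["wants"], [9] * 10))                       # good (total 90)
print(rate(RULES["spec"], [8, 10, 9, 9, 9, 9, 9, 9, 9, 9]))  # required item not met
print(rate(RULES["architecture"], [6, 9, 9, 5, 6]))          # acceptable (total 35)
```

Keeping the thresholds as data rather than logic reflects the point that the numerical scheme matters less than the differentiation it produces: an organization could substitute its own values without touching `rate`.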

Part two of this article will appear in next month's issue.

About the author

D.H. Stamatis, Ph.D., CQE, CMfgE, is president of Contemporary Consultants. He specializes in quality science and is the author of 20 books and many articles about quality. This article is based on his recent work in Six Sigma and Beyond, a seven-volume resource on the subject. More about design for Six Sigma may be found in volume six of that work, which is published by CRC Press. E-mail Stamatis at dstamatis@qualitydigest.com. Comments on this article can be sent to letters@qualitydigest.com.